In the blog post Call a Fabric REST API from Azure Data Factory, I explained how you can call a Fabric REST API endpoint from Azure Data Factory (or Synapse, if you will). Let's go a step further and execute a Fabric Data Pipeline from an ADF pipeline, which is a common request. A Fabric capacity cannot auto-resume, so you typically have an ADF pipeline that starts the Fabric capacity. Once the capacity is running, you want to kick off your ETL pipelines in Fabric, and now you can do that from ADF as well.

Prerequisites

You obviously need an ADF instance and a Fabric-enabled workspace in the Fabric/Power BI service. In Fabric, I created a very simple pipeline with a Wait activity:

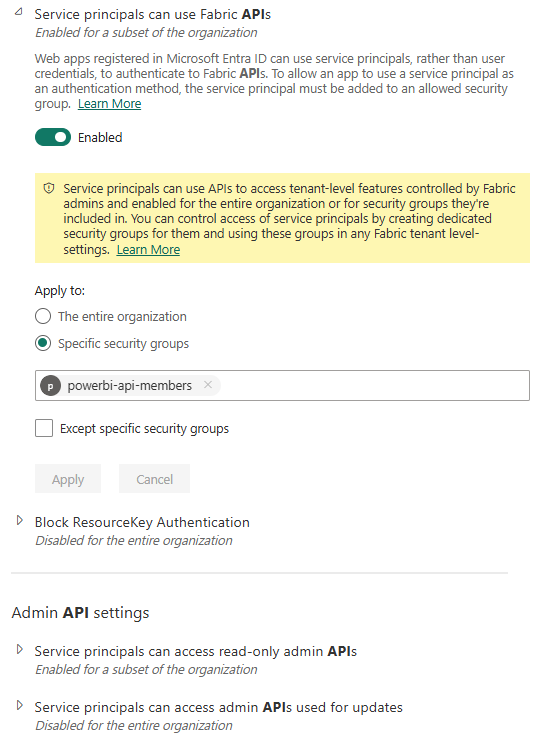

To authenticate with the Fabric REST API, we need either a service principal or the managed identity of the ADF instance (the latter is preferred, as it doesn't have a secret that can expire). You then need to create a security group in Microsoft Entra ID and add the SP or the managed identity to that group. In the Fabric Admin portal, enable the tenant setting that allows service principals to use the Fabric REST APIs and apply it to that security group.

Once that’s done, you can add the SP or the managed identity to your workspace as a contributor.

Trigger the Pipeline from ADF

The endpoint that we’re going to use is Run on demand pipeline job. It has the following URL:

https://api.fabric.microsoft.com/v1/workspaces/{workspaceId}/items/{itemId}/jobs/instances?jobType=Pipeline

We need two parameters:

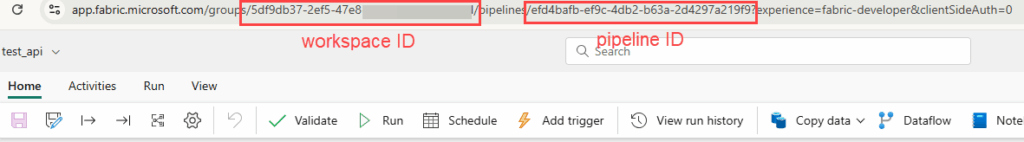

- the workspace ID

- the ID of the Data Pipeline in Fabric (unfortunately it’s not possible to trigger a pipeline by name)

Both can be found in the URL when you have the pipeline open in the browser:

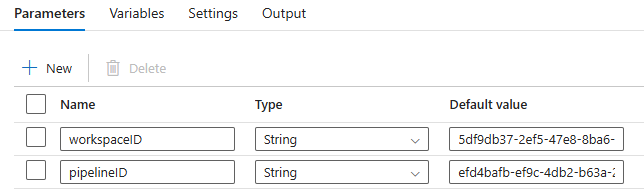
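If you grab these IDs often, a small helper can pull them out of the browser URL. This is a minimal sketch, assuming the pipeline URL has the shape `.../groups/{workspaceId}/pipelines/{pipelineId}?...`; the helper name `ids_from_browser_url` and the GUIDs in the example are made up, and the pattern may need adjusting if the Fabric portal changes its URL layout.

```python
import re
from typing import Tuple
from urllib.parse import urlparse

# A GUID such as 11111111-2222-3333-4444-555555555555
GUID = r"[0-9a-fA-F]{8}-(?:[0-9a-fA-F]{4}-){3}[0-9a-fA-F]{12}"

# Assumed path layout of a Fabric Data Pipeline opened in the browser.
PIPELINE_PATH = re.compile(rf"/groups/({GUID})/pipelines/({GUID})")


def ids_from_browser_url(url: str) -> Tuple[str, str]:
    """Return (workspace_id, pipeline_id) taken from a Fabric pipeline URL."""
    match = PIPELINE_PATH.search(urlparse(url).path)
    if match is None:
        raise ValueError(f"no workspace/pipeline IDs found in {url!r}")
    return match.group(1), match.group(2)


workspace_id, pipeline_id = ids_from_browser_url(
    "https://app.fabric.microsoft.com/groups/11111111-2222-3333-4444-555555555555"
    "/pipelines/aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee?experience=data-factory"
)
print(workspace_id, pipeline_id)
```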

I added both as parameters of my ADF pipeline:

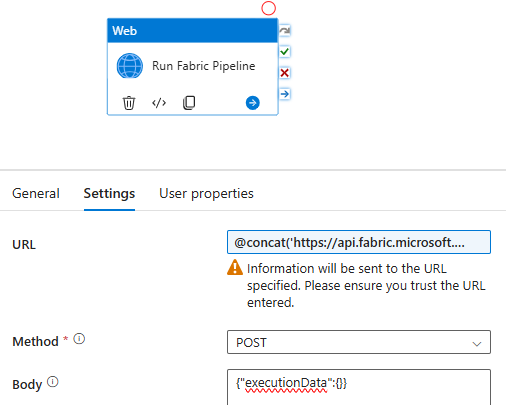

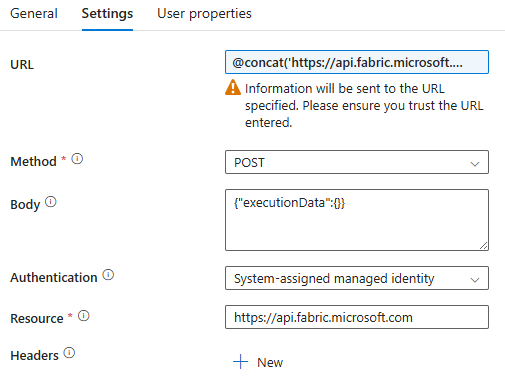

To call the Fabric REST API we will use a Web activity. For the URL I used the following expression:

@concat('https://api.fabric.microsoft.com/v1/workspaces/',pipeline().parameters.workspaceID,'/items/',pipeline().parameters.pipelineID,'/jobs/instances?jobType=Pipeline')
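To test the call outside ADF, for example from a script, the same URL can be built in Python. This sketch mirrors the concat() expression; `run_pipeline_url` is a hypothetical helper, and URL-quoting the IDs is only a safety net, since GUIDs contain no reserved characters.

```python
from urllib.parse import quote, urlencode

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def run_pipeline_url(workspace_id: str, pipeline_id: str) -> str:
    """Build the 'Run on demand pipeline job' URL, like the ADF concat() expression."""
    ws = quote(workspace_id, safe="")
    item = quote(pipeline_id, safe="")
    query = urlencode({"jobType": "Pipeline"})  # job type used for Data Pipelines
    return f"{FABRIC_API}/workspaces/{ws}/items/{item}/jobs/instances?{query}"


print(run_pipeline_url("11111111-2222-3333-4444-555555555555",
                       "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee"))
```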

The method is set to POST, and I used the following body:

{"executionData":{}}

If you need to pass parameter values to the Fabric pipeline, you can add them to the JSON body. You can find an example here.

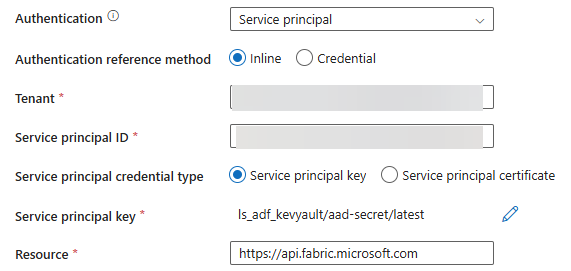
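Here is a minimal sketch of building that body in Python. Passing values under `executionData.parameters` is an assumption based on the example in the Fabric REST API documentation, and the parameter name `LoadDate` is made up; without parameters, the helper produces exactly the `{"executionData":{}}` body used here.

```python
import json
from typing import Any, Dict, Optional


def run_pipeline_body(parameters: Optional[Dict[str, Any]] = None) -> str:
    """Return the JSON body for the Run on demand pipeline job request."""
    execution_data: Dict[str, Any] = {}
    if parameters:
        # Assumed location of pipeline parameter values in the request body.
        execution_data["parameters"] = parameters
    # Compact separators give the same string as the body typed into ADF.
    return json.dumps({"executionData": execution_data}, separators=(",", ":"))


print(run_pipeline_body())  # {"executionData":{}}
print(run_pipeline_body({"LoadDate": "2024-01-31"}))
```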

If you use service principal authentication, you'll need the app ID of the SP and its secret (which you stored in Azure Key Vault, of course).

If you use the managed identity of ADF, the configuration is a bit easier:

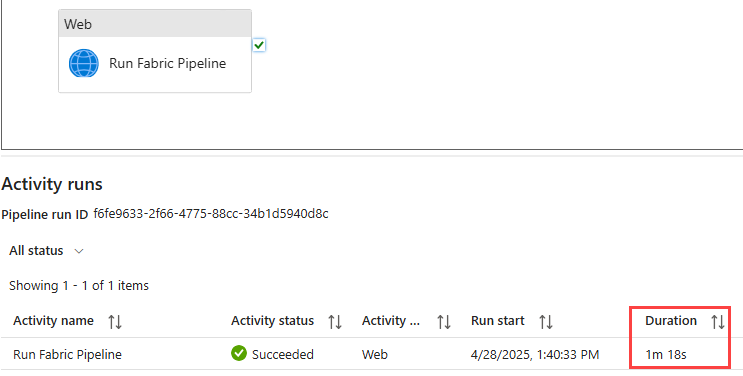

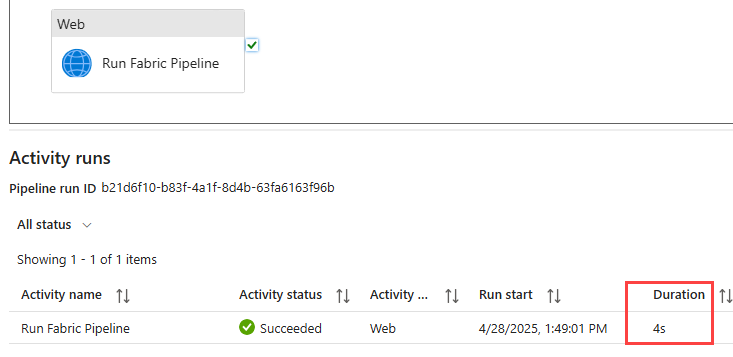
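With service principal authentication, ADF fetches an access token on your behalf before calling the API. To make that step less of a black box, here is a sketch of the equivalent OAuth 2.0 client-credentials request against Microsoft Entra ID, built but not sent. The tenant ID, app ID and secret are placeholders; in ADF the secret would come from Key Vault, and with the managed identity ADF obtains the token without any secret.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Token scope for the Fabric REST API.
FABRIC_SCOPE = "https://api.fabric.microsoft.com/.default"


def token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Build (but don't send) a client-credentials token request for a service principal."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": FABRIC_SCOPE,
    }).encode("ascii")
    return Request(url, data=form, method="POST",
                   headers={"Content-Type": "application/x-www-form-urlencoded"})


req = token_request("my-tenant-id", "my-app-id", "secret-from-key-vault")
print(req.get_method(), req.full_url)
```

Sending it with `urllib.request.urlopen(req)` returns JSON containing an `access_token`, which then goes into an `Authorization: Bearer ...` header on the Fabric call.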

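Once the configuration is done, you can debug the ADF pipeline. By default, the Web activity follows the asynchronous request-reply pattern: the Fabric API responds with 202 Accepted and a Location header, and ADF keeps polling that location until the job is done. In practice, this means the Web activity runs for as long as the Fabric pipeline is running.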
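The Run on demand job endpoint replies with 202 Accepted and a Location header. For illustration only (this is not ADF's actual implementation), here is a sketch of the generic asynchronous request-reply loop: `post` and `get` are hypothetical callables you would wire to an HTTP client, and the 10-second fallback wait is an arbitrary choice.

```python
import time
from typing import Callable, Dict, Tuple

# (HTTP status code, response headers); header names are matched exactly here.
Response = Tuple[int, Dict[str, str]]


def wait_for_completion(
    post: Callable[[], Response],
    get: Callable[[str], Response],
    sleep: Callable[[float], None] = time.sleep,
    default_wait: float = 10.0,
    max_polls: int = 1000,
) -> int:
    """POST once, then poll the Location URL for as long as the answer is 202."""
    status, headers = post()
    location = headers.get("Location")
    polls = 0
    while status == 202:
        if location is None:
            raise RuntimeError("202 Accepted without a Location header")
        if polls >= max_polls:
            raise TimeoutError(f"still 202 after {max_polls} polls")
        # Respect Retry-After when the service sends it, otherwise use a default.
        sleep(float(headers.get("Retry-After", default_wait)))
        status, headers = get(location)
        location = headers.get("Location", location)  # keep the last known URL
        polls += 1
    return status
```

Injecting `sleep` keeps the loop testable without real waiting; the `max_polls` cap stands in for the activity timeout ADF applies.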

You can verify the Fabric pipeline was triggered in the Fabric monitoring view:

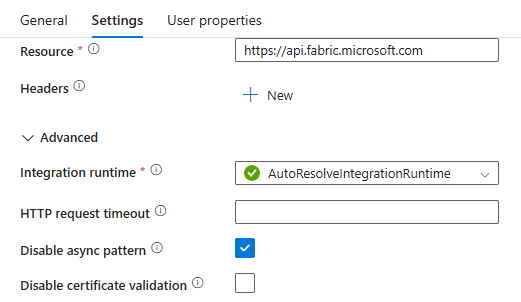

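If you don't want the Web activity to wait (a running activity in ADF costs money), you can select the Disable async pattern option in the advanced settings. The name is really confusing: it switches off ADF's polling of the Fabric job, so the Web activity returns as soon as Fabric accepts the request.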

The ADF pipeline will then finish almost instantly. However, it succeeds as long as Fabric accepts the request, even if the Fabric Data Pipeline itself fails.
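If you disable the async pattern but still want to know whether the Fabric pipeline succeeded, one option is to keep the Location header from the POST response and check the job instance later, for example from a separate activity. This is a minimal sketch, assuming the Location URL ends in the job instance ID and that the job instance reports a `status` such as NotStarted, InProgress, Completed, Failed, Cancelled or Deduped (check the Fabric Job Scheduler API reference); the helper names are hypothetical.

```python
from urllib.parse import urlparse

# Status values as we understand them from the Fabric Job Scheduler API; verify before relying on them.
NOT_SUCCEEDED = {"Failed", "Cancelled", "Deduped"}


def job_instance_id(location: str) -> str:
    """Take the job instance ID from the last path segment of the Location header."""
    return urlparse(location).path.rstrip("/").rsplit("/", 1)[-1]


def job_outcome(status: str) -> str:
    """Collapse a job instance status into one of three outcomes."""
    if status == "Completed":
        return "succeeded"
    if status in NOT_SUCCEEDED:
        return "did not succeed"
    return "still running"  # e.g. NotStarted or InProgress


location = ("https://api.fabric.microsoft.com/v1/workspaces/ws/items/pl"
            "/jobs/instances/job-123")
print(job_instance_id(location), job_outcome("Failed"))
```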

I’m quite happy with this new functionality as it makes working with Fabric a bit easier and more mature.

The post Execute Fabric Data Pipeline from Azure Data Factory first appeared on Under the kover of business intelligence.