I saw some good reviews of the small gemma3 model in a few places and wanted to try it locally.

If you want to get started, read my post on setting up a local LLM. This post shows an alternative to connecting to the container from the CLI and running a command: adding the model through the web UI.

This is part of a series of experiments with AI systems.

Adding a Model

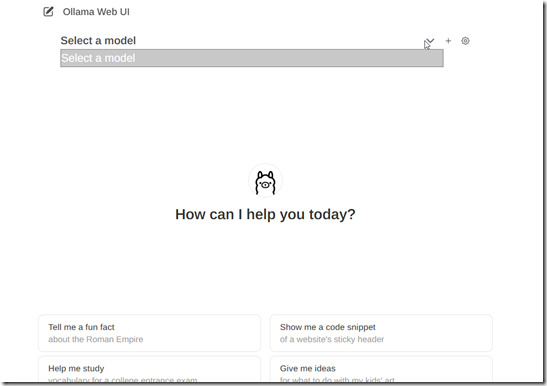

Using the Ollama-WebUI server, it’s easy to add models. I wrote about setting up the UI, and I’m running it as my interface to the local model. I created a new container for this post, so the interface has no models to choose from. You can see this below.

I need a model: if I try to ask a question, I get an error saying that no model is selected. You can see the error at the top of this image and my query at the bottom.
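If you'd like to confirm the empty model list outside the UI, here is a minimal sketch that asks Ollama which models it has installed through its /api/tags endpoint. It assumes the Ollama API is reachable at http://localhost:11434 (Ollama's default port; how your container publishes ports may differ), and the function names are my own, not part of any library.

```python
import json
import urllib.request

# Assumption: the Ollama API is published on its default port, 11434.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response: dict) -> list:
    """Extract the model names from a GET /api/tags response body."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list:
    """Ask the Ollama server which models it has installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    # On a fresh container with no models, this prints an empty list.
    print(list_local_models())
```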

If I click the gear icon next to the model drop-down, I get a list of settings. One of these is “Models”, the third item on the left.

I click Models and get this list. As you can see, I have no models. Notice that I need to enter a model name. However, below where the cursor is, there is a “click here” link to a list of models.

This opens the Ollama model library at https://ollama.com/library.

If I scroll down, I see the gemma3 model. If I click it, I see a few different versions. I’m not a big fan of using “latest”, but this is a test, and since I’m not building anything that depends on a specific version, I decided to just grab it.

If I enter this name in my WebUI and click download, the download starts.

Progress is shown, and it’s not quick. I went on to other work; this is the view about 15 minutes later.
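The same download can also be started from a script. Below is a minimal sketch that posts to Ollama's /api/pull endpoint and turns the streamed status objects into percentage lines, much like the progress the UI shows. It assumes the API is at http://localhost:11434 and that the model name is "gemma3" (which Ollama resolves to the latest tag); the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Iterable, Iterator

# Assumption: the Ollama API is published on its default port, 11434.
OLLAMA_URL = "http://localhost:11434"


def progress_lines(events: Iterable[dict]) -> Iterator[str]:
    """Turn streamed /api/pull status objects into readable progress lines."""
    for event in events:
        status = event.get("status", "")
        total = event.get("total")
        completed = event.get("completed")
        # Layer downloads report byte counts; other steps only report a status.
        if total and completed is not None:
            yield f"{status}: {100 * completed / total:.1f}%"
        else:
            yield status


def pull_model(name: str, base_url: str = OLLAMA_URL) -> Iterator[dict]:
    """POST /api/pull and yield each JSON object the server streams back."""
    body = json.dumps({"model": name}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # The response is newline-delimited JSON: one status object per line.
        for line in resp:
            if line.strip():
                yield json.loads(line)


if __name__ == "__main__":
    for text in progress_lines(pull_model("gemma3")):
        print(text)
```

Separating the parsing from the network call keeps the progress formatting easy to check without a running server.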

Once the download completes, I close the settings and see my model in the list. Since this is the only model for this container, I’ll click “set as default”.
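With the model installed, it can also be queried without the UI. This is a minimal sketch using Ollama's /api/generate endpoint with streaming turned off, so the server returns a single JSON object whose "response" field holds the answer. As before, it assumes the API is at http://localhost:11434; the function names and the example prompt are hypothetical.

```python
import json
import urllib.request

# Assumption: the Ollama API is published on its default port, 11434.
OLLAMA_URL = "http://localhost:11434"


def generate_payload(model: str, prompt: str) -> bytes:
    """Build a non-streaming /api/generate request body."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def ask(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt to the model and return the generated text."""
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=generate_payload(model, prompt),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # With "stream": false, the reply is one JSON object;
        # the generated text is in its "response" field.
        return json.load(resp)["response"]


if __name__ == "__main__":
    # Hypothetical prompt, similar in spirit to asking for a fun fact.
    print(ask("gemma3", "Tell me a fun fact."))
```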

Now I can get my fun fact:

Now I have a safe, secure, local model to use. If you want to see this run in real time, check out this video: