✨ Key Points

- AGI is expected around the 2040s, with some predictions as early as 2025.

- ASI is predicted to follow shortly after AGI, likely by the mid-2040s to early 2050s.

AGI Timeline

✨ Artificial General Intelligence (AGI) is AI that can perform any intellectual task a human can, at human level.

Based on expert surveys and predictions, AGI is anticipated to be achieved around the 2040s.

For example, the AI Impacts 2023 survey of 2,778 AI researchers reports a median year of 2047 for a 50% chance of high-level machine intelligence (HLMI), which some definitions equate with AGI.

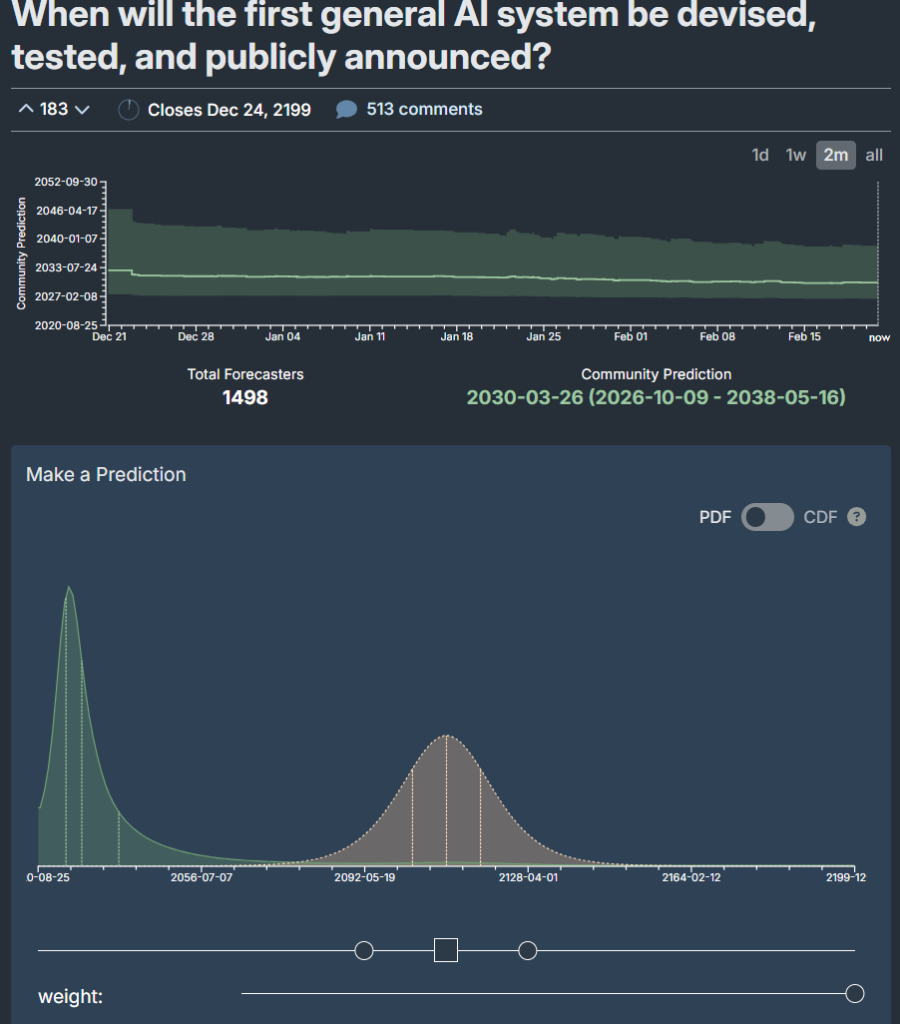

However, the community prediction on Metaculus is earlier, with a median estimate around 2030 (Metaculus Prediction).

ASI Timeline

✨ Artificial Super Intelligence (ASI) is AI that surpasses human intelligence in all areas.

It’s expected to follow AGI closely, potentially within a few years, because of a hypothesized intelligence explosion in which AI rapidly improves itself.

Predictions vary, with Elon Musk suggesting AI could surpass human intelligence by 2025 or 2026 (Elon Musk’s Prediction), and Masayoshi Son predicting ASI around 2034. More conservative estimates, like the AI Impacts survey, place it around 2047.

It’s surprising that some forecasters, like Elon Musk, predict AI surpassing human intelligence as early as 2025, only a year after the prediction was made, given the complexity and current state of AI technology.

Detailed Analysis of Predictions for AGI and ASI Timelines

This section provides a comprehensive analysis of predictions for Artificial General Intelligence (AGI) and Artificial Super Intelligence (ASI), drawing from expert surveys, individual predictions, and theoretical frameworks. The analysis aims to capture the range of opinions and the methodologies behind these predictions, offering a detailed understanding for those interested in the future of AI development.

Definitions and Context

- AGI: Defined as AI capable of performing any intellectual task that a human can, at human level. This is often equated with human-level machine intelligence in surveys.

- ASI: Defined as AI that surpasses human intelligence in all areas, potentially leading to an “intelligence explosion” where AI rapidly self-improves beyond human control.

The distinction between AGI and ASI is crucial, as AGI represents a machine at human-level intelligence, while ASI is superhuman, capable of outperforming humans in every cognitive domain. The timeline from AGI to ASI is often hypothesized to be short due to recursive self-improvement, but predictions vary widely.

Survey Data and Expert Opinions

Several surveys and studies provide insights into expected timelines, with varying definitions and methodologies:

AI Impacts Surveys:

The 2016, 2022, and 2023 Expert Surveys on Progress in AI by AI Impacts polled large numbers of machine learning researchers. The 2023 survey, with 2,778 respondents, defined “High-Level Machine Intelligence” (HLMI) as the point when unaided machines can accomplish every task better and more cheaply than human workers. Because that bar is superhuman across all tasks, it arguably aligns more closely with ASI than with AGI. The median prediction for a 50% chance of HLMI was 2047 (AI Impacts Survey 2023).

In the 2016 survey, the median year for a 50% chance of HLMI was 2040, while “Full Automation of Labor” (FAOL), defined as AI doing all jobs as well as humans at the same cost, had a median of 2090. Because FAOL (AGI-like) would be expected to precede HLMI (ASI), this ordering suggests that respondents may have interpreted the two questions differently. The 2022 survey reported HLMI at 2047 and FAOL at 2080, so HLMI still came earlier, which remains counterintuitive (2016 Expert Survey, 2022 Expert Survey).

The surveys also asked about specific tasks. For passing the Turing test, which is often used as a proxy for AGI-level conversational ability, the 2016 median year for a 50% chance was 2040.

Metaculus Community Prediction:

Metaculus, a prediction platform, hosts a community prediction for the date of artificial general intelligence. The median estimate is 2030-05-13, with a range from 2026 to 2038. It is based on 1,485 forecasters and reflects a more optimistic view than the expert surveys (Metaculus Prediction).

AGI Conference Surveys:

The AGI-11 survey, with 60 participants, found that nearly half believed AGI would appear before 2030 and nearly 90% before 2100, with about 85% believing it would be beneficial. In the AGI-09 survey, the median dates for a 10%, 50%, and 90% probability of AI passing a Turing test were 2020, 2040, and 2075, respectively, and these estimates changed little when respondents assumed additional funding (AGI-11 Survey, AGI-09 Survey).
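
The three AGI-09 quantiles can be read as points on a cumulative probability curve. The Python sketch below shows one rough way to estimate the implied probability for an intermediate year. It assumes straight-line interpolation between the reported points, which the survey itself does not state, and the helper name `probability_by` is hypothetical.

```python
# Quantiles reported in the text for the AGI-09 Turing-test question,
# as (year, cumulative probability). The straight-line interpolation
# between them is an assumption made for illustration only.
AGI09_QUANTILES = [(2020, 0.10), (2040, 0.50), (2075, 0.90)]


def probability_by(year, quantiles=AGI09_QUANTILES):
    """Linearly interpolate the cumulative probability for `year`.

    Returns None outside the reported range, because the summary
    gives no information about the tails of the distribution.
    """
    for (y0, p0), (y1, p1) in zip(quantiles, quantiles[1:]):
        if y0 <= year <= y1:
            return p0 + (p1 - p0) * (year - y0) / (y1 - y0)
    return None


for year in (2030, 2047, 2060):
    print(year, probability_by(year))
```

Under this assumption, 2030 falls halfway between the 10% and 50% points, so the implied probability is about 30%. A different curve shape between the reported points would give a different value.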

Individual Expert Predictions

Individual predictions provide a broader spectrum, often more optimistic than survey medians:

- Elon Musk: In an interview on X, Musk predicted that AI would surpass the intelligence of the smartest human by the end of 2025 or 2026 and would exceed all humans within five years. He highlighted hardware and electricity supply as potential bottlenecks (Elon Musk’s Prediction).

- Masayoshi Son: The CEO of SoftBank predicted ASI, defined as 10,000 times smarter than human geniuses, within 10 years from 2024, around 2034, suggesting a rapid progression (Masayoshi Son’s Prediction).

- Nick Bostrom: A philosopher known for his work on superintelligence. In a 1997 analysis, he predicted superintelligence before 2033, with less than 50% probability. In a 2024 interview with Tom Bilyeu, he stated that AI timelines appear relatively short and suggested we are far along the path to AGI, but he did not give specific years. An X post attributed to him suggested the singularity was a year or two away, though this attribution is unverified (Nick Bostrom’s Prediction).

Theoretical Frameworks and Intelligence Explosion

The concept of an intelligence explosion, first proposed by I. J. Good in 1965, suggests that once AGI is achieved, it could enter a positive feedback loop of self-improvement, leading to ASI rapidly. This is central to the technological singularity hypothesis:

- Some sources, like a Medium post by Michael Araki, claim the transition from AGI to ASI could be instantaneous in human terms, with AGI potentially self-improving in minutes due to its ability to enhance its algorithms (Medium Post on AGI to ASI).

- Others, like the LessWrong post on 3-year AGI timelines, suggest a “centaur period” of around 1 year after AGI, but the exact timeline to ASI remains unclear (LessWrong Post).
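
The difference between these views can be illustrated with a toy model of the feedback loop. The Python sketch below is not taken from any of the cited sources. The function `years_to_target`, the per-cycle gain, the cycle length, and the 10,000x target are all hypothetical values chosen only to show how strongly the result depends on whether self-improvement speeds itself up.

```python
def years_to_target(gain_per_cycle, base_cycle_years, target=10_000.0,
                    speedup=True, max_cycles=10_000):
    """Toy model: elapsed years until capability reaches `target`.

    Capability starts at 1.0 (human level, i.e. AGI) and is multiplied
    by (1 + gain_per_cycle) each improvement cycle. With speedup=True a
    cycle takes base_cycle_years / capability, so a more capable system
    improves itself faster (the feedback loop). With speedup=False every
    cycle takes the same time. All numbers are hypothetical.
    """
    capability, elapsed = 1.0, 0.0
    for _ in range(max_cycles):
        if capability >= target:
            return elapsed
        elapsed += base_cycle_years / capability if speedup else base_cycle_years
        capability *= 1.0 + gain_per_cycle
    return None  # target not reached within max_cycles


# Same gain per cycle, with and without the feedback loop.
print(years_to_target(0.5, 1.0, speedup=True))
print(years_to_target(0.5, 1.0, speedup=False))
```

With a 50% gain per cycle and a one-year first cycle, the version with feedback reaches the target in under three years, while the version without feedback needs 23 years. The model says nothing about which assumption is realistic; it only shows why forecasts that accept recursive self-improvement place ASI so soon after AGI.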

Analysis of Discrepancies

There are notable discrepancies in predictions, partly due to differing definitions:

- The AI Impacts surveys show HLMI (ASI) predicted earlier than FAOL (AGI-like), which is counterintuitive. This may reflect respondent interpretation, where HLMI is seen as achievable before full economic replacement, or definitional ambiguity.

- Early predictions, like Musk’s 2025-2026, contrast with conservative survey medians like 2047, highlighting optimism versus caution in the field.

Table of Key Predictions

| Source | Type | AGI Timeline (50% Chance) | ASI Timeline (50% Chance) | Notes |
|---|---|---|---|---|
| AI Impacts 2023 Survey | Survey | ~2047 (HLMI, ASI-like) | ~2047 | HLMI defined as better and cheaper than humans, may include ASI. |
| AI Impacts 2016 Survey | Survey | ~2040 (Turing Test) | ~2040 (HLMI) | FAOL at 2090, suggesting definitional confusion. |
| Metaculus Community | Community | ~2030 | N/A | Median date for AGI announcement, range 2026-2038. |
| Elon Musk | Individual | ~2025-2026 | ~2026-2030 | Predicts surpassing smartest human by 2025, all humans by 2030. |
| Masayoshi Son | Individual | N/A | ~2034 | Predicts ASI 10,000 times smarter than humans in 10 years from 2024. |
| Nick Bostrom (1997) | Individual | N/A | ~2033 | Less than 50% probability, outdated. |
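
To make the spread in the ASI column concrete, the Python sketch below computes its range and a median. The years are copied from the table. Collapsing each range to its midpoint and treating every row as a comparable point estimate (including Bostrom's, which he gave less than 50% probability) are simplifying assumptions for illustration only.

```python
from statistics import median

# ASI estimates from the table, as (earliest, latest) years.
# Rows marked N/A are omitted. Using range midpoints and weighting
# every source equally are assumptions, not part of the sources.
ASI_ESTIMATES = {
    "AI Impacts 2023 Survey": (2047, 2047),
    "AI Impacts 2016 Survey": (2040, 2040),
    "Elon Musk": (2026, 2030),
    "Masayoshi Son": (2034, 2034),
    "Nick Bostrom (1997)": (2033, 2033),
}

midpoints = {src: (lo + hi) / 2 for src, (lo, hi) in ASI_ESTIMATES.items()}
earliest = min(lo for lo, _ in ASI_ESTIMATES.values())
latest = max(hi for _, hi in ASI_ESTIMATES.values())
middle = median(midpoints.values())

print(f"range: {earliest}-{latest}, median of midpoints: {middle}")
```

The tabulated ASI estimates span 2026 to 2047, with a median of 2034 under these assumptions. That median mixes surveys with individual statements, so it summarizes the table rather than serving as a forecast of its own.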

Conclusion

Based on the analysis, AGI is expected around the 2040s: Turing test proxies give a median estimate near 2040, while community predictions point earlier, to around 2030.

ASI, defined as surpassing human intelligence in all areas, is likely to follow shortly after, potentially by the mid-2040s to early 2050s, given the intelligence explosion hypothesis.

However, optimistic predictions like Musk’s 2025-2026 and Son’s 2034 suggest it could be sooner, while conservative surveys push timelines later.