At SQL Saturday Boston 2025, I gave a presentation on local LLMs, and someone asked a great question that I wasn't sure how to answer: how does the download size relate to the model size listed on Hugging Face (or elsewhere)? It was a good question. I had assumed the parameter count (258M, 7B, etc.) relates to the download size or size on disk.

I did a few searches and found a great article on LinkedIn called The Intergalactic Guide to LLM Parameter Sizes. It includes a quick guide:

- Tiny (1-3B parameters): 1-2GB on disk

- Small (4-8B parameters): 3-5GB

- Medium (10-15B): 8-15GB

- Large (30-70B): 20-40GB

- Enormous (100B-200B): 60-150GB

- Apocalypse-Inducing (500B+): 300GB+
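These ranges follow from simple arithmetic: a model's weights take roughly (parameter count × bits per weight ÷ 8) bytes, so the parameter count only becomes a download size once you know the precision. The sketch below is a minimal estimate under that assumption. It ignores file metadata and the mixed precisions that real quantized formats use, and `estimate_size_gb` is a hypothetical helper, not part of any tool.

```python
# Rough sketch: estimate the on-disk size of a model's weights from its parameter count.
# Assumption: size ≈ parameters × bits per weight / 8. Real model files add metadata,
# and quantized formats often mix precisions, so treat results as ballpark figures.

def estimate_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB (1 GB = 10**9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare a 7B model at full 16-bit precision and two common quantization levels.
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{estimate_size_gb(7, bits):.1f} GB")
```

At 4 bits per weight, a 7B model works out to about 3.5GB, which lands inside the guide's "Small (3-5GB)" bracket; the same model at 16-bit precision would be about 14GB. That suggests the guide's figures assume quantized downloads, which local runners often use.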

That's a good rule of thumb and worth knowing. Storage isn't the main concern, though; power consumption is.

I have a laptop which has these specs:

- 12-core Intel Core Ultra 7 CPU, 2.1GHz (base)

- 32GB RAM

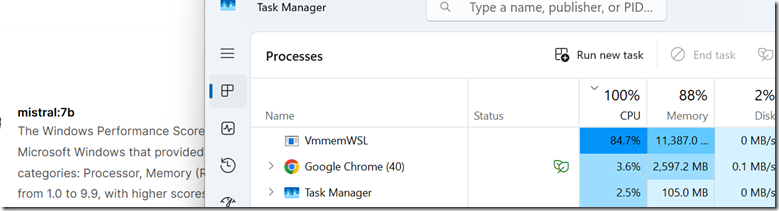

I asked a simple question of my local model (Ollama, mistral 7b), so a small model, and I see this in task manager

For small models, the guide compares power consumption to a small desk fan and says any modern laptop can run them. I was playing with this on a plane, though, and could watch my battery draining. I don't think storage is a problem on most modern laptops and desktops, as long as you stick to a Tiny, Small, Medium, or Large model. Power, however, can be a challenge, especially if you don't have a good GPU. My laptop has an Intel NPU (AI Boost) designed to accelerate AI workloads, though I don't know whether the container uses it. I also have an Intel GPU, but even so, this GenAI stuff uses power.

Be careful running a local model on a laptop that isn't plugged in if you need to get a lot of work done.