The landscape of data management has dramatically shifted from rigid, siloed systems to flexible, integrated platforms designed to handle the unprecedented volume, variety, and velocity of modern data. This evolution is driven by the increasing demand for real-time insights, advanced analytics, and sophisticated AI/ML capabilities across all business functions.

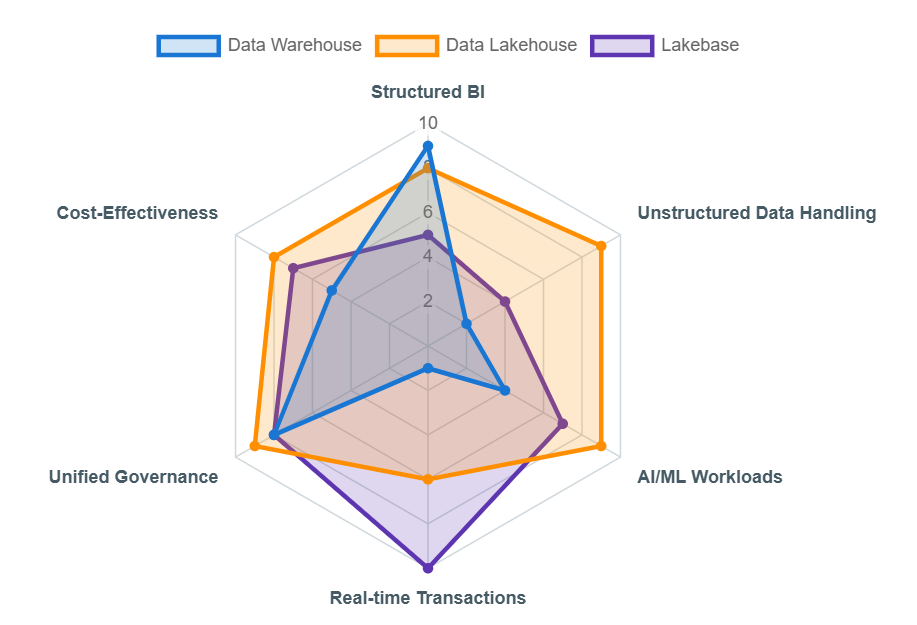

You may have read my previous post about lakebases, this post digs a little bit deeper into the modern Business Intelligence (BI) platform, delineating the roles and optimal applications of three pivotal architectural paradigms: the Data Warehouse (DW), the Data Lakehouse (DLH), and the emerging Lakebase. While Data Warehouses traditionally excel in processing structured data for conventional BI, Data Lakehouses offer a unified approach for managing both structured and unstructured data, thereby supporting a broader spectrum of analytics, including advanced AI/ML workloads. Lakebase further extends this integration by embedding high-throughput transactional capabilities directly within the lakehouse environment, blurring the lines between operational and analytical systems.

Strategic architectural decisions hinge on a precise understanding of specific workload requirements—ranging from low-latency operational transactions to complex historical analysis and real-time AI inference. This report provides a comprehensive framework for selecting the most appropriate architecture to maximize business value, operational efficiency, and future adaptability.

Introduction to Modern Data Architectures

Evolution from Traditional to Modern

Historically, organizations relied on siloed and fragmented data systems, which presented significant challenges for efficient data integration and analysis. These traditional architectures were inherently limited in their ability to handle the escalating volume, variety, and velocity of modern data. The sheer abundance and heterogeneity of data, encompassing structured, unstructured, and semi-structured formats, necessitated a departure from the rigid, schema-on-write approaches common in older data warehouses. This fundamental shift in the data landscape is the primary catalyst for the emergence of data lakes and, subsequently, data lakehouses. It signifies a profound paradigm shift in how businesses perceive and leverage data, moving beyond retrospective reporting to embrace proactive, predictive analytics and real-time operational intelligence. This evolution also necessitates a significant re-skilling and up-skilling of data professionals within organizations.

A modern data architecture is designed to overcome these limitations by providing a scalable, integrated, and governed approach to data management. This paradigm enables organizations to seamlessly move data between a central data lake and various purpose-built data services, ensuring that data is accessible and usable across diverse applications and analytical needs.

Core Components of a Modern Data Platform

A modern data platform, often conceptualized as a “data stack,” comprises several foundational layers that operate in concert to deliver end-to-end data capabilities. The success of such a platform is contingent not just on the individual capabilities of its components, but crucially on their seamless integration and sophisticated orchestration. This necessitates a holistic architectural approach, robust and automated data pipelines, and strong data governance to ensure data quality, consistency, and trustworthiness across the entire ecosystem. The recent trend towards unified data platforms, such as Databricks’ Lakehouse and Lakebase offerings, is a direct response to the inherent complexity and operational overhead of managing disparate tools for each component, aiming to simplify the data landscape.

- Data Ingestion: This is the initial process of collecting data from diverse sources, such as databases, SaaS applications, or files, and loading it into a designated target like a data lake or data warehouse. Ingestion can be executed in various modes, including batch, real-time, or stream processing. While real-time processing offers immediate data availability, it typically incurs higher costs due to the continuous monitoring of data sources.

- Data Storage and Processing: This layer is paramount for the effective and efficient utilization of data. It encompasses various data stores—including data warehouses, data lakes, and data lakehouses—each tailored to specific data characteristics and use cases.

- Data Transformation: This crucial stage involves refining raw data to align with desired business logic and enhance its utility for downstream applications. Techniques include data cleaning (identifying and correcting errors), data normalization (standardizing data to a common structure), data aggregation (combining multiple data points into summary statistics), and feature engineering (creating new variables to boost predictive power for machine learning algorithms). While traditional ETL (Extract, Transform, Load) processes were common, modern cloud environments increasingly favor ELT (Extract, Load, Transform) due to the cloud’s scalable processing power, allowing raw data to be loaded first and transformed as needed.

- Data Orchestration: This component ensures the smooth and automated flow of data throughout its entire lifecycle. Key elements include data integration, transformation workflow automation, data monitoring, robust error handling, and comprehensive data security.

- Data Governance: A critical aspect for securing, monitoring, and managing access to data in a unified and compliant manner. Effective governance involves maintaining consistent common vocabularies, curating data for optimal use, and establishing central frameworks for schema evolution, particularly vital for streaming use cases.

- Business Intelligence (BI) and Analytics/Data Visualization: This involves the visual representation of data and information through elements such as charts, graphs, maps, and dashboards to reveal insights and patterns. Analytics capabilities in modern architectures range from traditional data warehousing and batch reporting to real-time analytics, near real-time alerting, and advanced ML-based use cases.

- Data Observability: A newer, yet increasingly vital, layer focused on monitoring the health, performance, and reliability of the entire data platform. The interconnectedness of these components means that if problems occur during ingestion, for example, every subsequent step of the downstream analytical workflows might suffer. This highlights a systemic dependency where the failure or inefficiency of one component can propagate and negatively impact the entire data pipeline.

The Foundation: Data Warehouses

Characteristics and Traditional Use Cases

A data warehouse (DW) is fundamentally a system designed to collate data from a wide range of sources within an organization, serving as a centralized data repository primarily for analytical and reporting purposes. They are specifically engineered to manage organized data with well-defined use cases. Historically, traditional DWs were deployed on-premises, relying on physical hardware such as multiple servers, CPUs, and disks located in dedicated server rooms, requiring significant in-house IT teams for installation, maintenance, and cooling.

These systems are optimized for structured data, storing information in a relational format to support complex queries and Business Intelligence (BI). Data within a DW is typically organized into a star or snowflake schema, utilizing fact and dimension tables, and is designed through conceptual, logical, and physical data models. Traditional DWs are commonly structured in a three-tier architecture: a bottom-tier database server (typically an RDBMS) for data extraction, a middle-tier OLAP (Online Analytical Processing) server for multidimensional data analysis, and a top tier of querying and reporting tools for data analysis and BI. Primary use cases include enterprise data warehousing (EDW) for consolidating data from all subject areas across an enterprise, and supporting data analysis, data mining, AI, and machine learning efforts on structured data.

Limitations of Traditional Data Warehouses

Traditional DWs, built on outdated hardware and rigid architectures, increasingly struggle to handle the soaring volume, variety, and velocity of modern data. These limitations are not merely technical inconveniences; they represent fundamental architectural incompatibilities with the dynamic and expansive demands of the digital age.

- Scalability Constraints: On-premises infrastructure faces inherent limitations in supporting increasing data sources, users, and workloads. Once the system reaches its physical capacity, further scaling becomes impractical and prohibitively expensive.

- Performance Bottlenecks: As data volumes and user concurrency expand, query speeds decline significantly due to hardware limitations and the inability to dynamically allocate resources. This leads to performance degradation under heavy use.

- Latency Issues: Traditional DWs are heavily dependent on batch ETL (Extract, Transform, Load) processes, which introduce delays during data extraction and transformation, slowing data refresh rates. Furthermore, the ETL process often necessitates the rejection of some raw data that could potentially be valuable for future, unforeseen analysis.

- High Costs: Traditional solutions involve significant upfront capital expenditure for hardware and software licenses, compounded by ongoing maintenance costs for infrastructure, software, and dedicated IT staff. Long-term storage of historical data can also become prohibitively expensive.

- Data Silos: Despite being centralized, traditional DW implementations can still lead to data fragmentation across multiple independent data marts, hindering a holistic enterprise view.

- Limited Data Types: A primary disadvantage is their limitation to storing only structured and semi-structured data, severely restricting the types of data that can be included and analyzed.

The physical constraints and architectural inflexibility of traditional data warehouses, with their reliance on dedicated server rooms and fixed hardware, stand in stark contrast to the demands imposed by the “soaring volume, variety, and velocity of modern data”. This indicates a fundamental mismatch. For any organization experiencing significant data growth or diversification of data types, traditional on-premises data warehouses are no longer a viable long-term strategic solution.

Advantages of Cloud Data Warehouses

Cloud-based data warehouse solutions fundamentally leverage the power of cloud computing to offer significantly enhanced scalability, performance, and accessibility compared to their traditional counterparts. Cloud computing is more than just an alternative deployment model for data warehouses; it is the fundamental technological bedrock that enables the entire modern data architecture paradigm. Without the inherent elasticity, comprehensive managed services, and flexible cost models offered by cloud platforms, subsequent innovations such as data lakes and data lakehouses would be far less practical, if not entirely unfeasible, for the majority of organizations. This signifies a profound shift from a capital expenditure model to an operational expenditure model, and from IT-managed infrastructure to provider-managed services, thereby liberating internal teams to concentrate on extracting business value from data rather than being burdened by infrastructure upkeep.

- Scalability and Flexibility: Cloud DWs enable organizations to effortlessly scale their data systems to accommodate growing data needs.1 They provide instant and cost-effective scaling with cloud infrastructure, allowing organizations to quickly expand resources without a long-term commitment.

- Cost Efficiency: Cloud data warehouses typically operate on a pay-as-you-go model, which substantially reduces initial capital investments in hardware and software licenses, as well as ongoing maintenance costs. The adoption of serverless techniques can further optimize and lower total costs.

- Optimized Performance: Cloud resources dynamically scale to provide optimized performance. They enable instant data processing through streaming ingestion pipelines and achieve faster query performance via parallel processing and columnar storage. Cloud DWs can deliver sub-second query performance and handle thousands of concurrent queries per second, preventing delays that hinder productivity.

- Managed Services: Cloud data warehouses are hosted and fully managed by cloud service providers, thereby eliminating the need for in-house hardware management and maintenance. Automatic updates and security patches are handled by the providers, ensuring access to the latest features and security measures.

- Accessibility and Collaboration: Data stored in cloud data warehouses can be accessed from anywhere with an internet connection, significantly facilitating remote work and enhancing global team collaboration.

- Unified Data Storage: Cloud data warehouses help overcome data fragmentation by providing unified data storage in one centralized cloud platform.

- Robust Security and Compliance: Cloud providers offer enterprise-grade security features, including encryption at rest and in transit, fine-grained access controls, and adherence to various compliance certifications, often surpassing the security capabilities of on-premises systems.

Embracing Flexibility: Data Lakes

Concept and Purpose

Data lakes represent a pivotal component of modern data architecture, serving as a centralized repository designed to store vast amounts of both structured and unstructured data. A key differentiator from traditional data warehouses is their ability to allow organizations to store data in its raw, native form, without the necessity for extensive upfront data transformation. This inherent flexibility facilitates the capture and storage of data from a multitude of diverse sources. Data lakes are typically built upon inexpensive object storage platforms, such as Amazon S3, making them cost-effective for large volumes.

Advantages

- Cost-Effectiveness: They offer a lower-cost storage environment, particularly advantageous for housing petabytes of raw data.

- Flexibility for Diverse Data: Data lakes can store all types of data—structured, unstructured, and semi-structured—in various native formats. This versatility empowers researchers and data scientists to work with a broad and diverse range of data more easily.

- Support for Unknown Future Uses: The ability to store data in its raw form provides significant flexibility for unforeseen analytical needs or future use cases that may not be immediately apparent.

- Removes Data Silos: By centralizing data from disparate sources, data lakes effectively help to break down organizational data silos, leading to improved decision-making and more comprehensive insights.

- AI and ML Support: Data lakes are crucial for a modern data strategy, enabling organizations to capture large amounts of raw data in real-time from diverse ML and IoT devices. This raw data is essential for machine learning and artificial intelligence, helping organizations predict future events and build intelligence into their systems. While the provided information does not explicitly detail feature engineering within data lakes, the fundamental ability to store raw, diverse data is a prerequisite for such processes.

Potential for “Data Swamps”

The primary challenge with data lakes lies in their inherent flexibility: without proper governance, metadata management, and organization, they can easily become disorganized and devolve into a “dreaded, inefficient data swamp” where extracting useful information becomes exceedingly difficult. Due to the raw and potentially unstructured nature of the data, data lakes often require advanced tools and specialized skills for effective data querying and analysis.

The data lake represents a crucial evolutionary step in democratizing data access and enabling novel forms of analytics, particularly in AI and ML, which often necessitate access to raw, diverse datasets. However, its emergence also starkly highlights the critical importance of robust data governance and metadata management. Without a comprehensive framework for cataloging, understanding, and curating the vast amounts of raw data, the transformative promise of the data lake can quickly turn into a significant organizational liability. This inherent challenge directly paved the way for the development of the data lakehouse, which attempts to impose order and structure upon the flexibility of the data lake.

The Unified Approach: Data Lakehouses

Combining Strengths of Data Lakes and Data Warehouses

A data lakehouse represents a significant architectural evolution, combining the capabilities of traditional data warehouses and modern data lakes into a single, cohesive data management solution. Its fundamental goal is to address the limitations inherent in both previous paradigms, offering a truly unified approach to data storage and processing. The data lakehouse architecture blends the flexibility, open format, and cost-effectiveness characteristic of data lakes with the accessibility, robust management features, and advanced analytics support typically found in data warehouses.

The data lakehouse is consistently described as an architecture that combines the “flexibility, open format, and cost-effectiveness of data lakes” with the “accessibility, management, and advanced analytics support of data warehouses”. It is explicitly stated to address the limitations of both preceding architectures. The critical enabling technology for this convergence is Delta Lake, which brings traditional data warehouse-like features (such as ACID transactions and implicit schema enforcement) to the cost-effective, flexible storage environment of data lakes.

The data lakehouse represents a significant maturation of the data architecture landscape. It acknowledges that neither the rigid structure of a data warehouse nor the unbridled flexibility of a raw data lake is sufficient on its own to meet the diverse and evolving data needs of modern enterprises. Its emergence signifies a strong industry trend towards unification and simplification of the data stack, aiming to reduce operational complexity and improve data accessibility for a much wider range of use cases, from traditional business intelligence to cutting-edge artificial intelligence. This also implies a potential consolidation of tooling and a reduction in the overall data engineering overhead.

Key Features and Advantages

- Unified Data Storage: A data lakehouse can store unlimited amounts of both structured and unstructured data in a single, centralized location, without the limitations often found in other systems. It effectively acts as a single-view repository for all types of organizational data.

- ACID Transactions via Delta Lake: A cornerstone of the lakehouse architecture is Delta Lake, an optimized storage layer that provides ACID (Atomicity, Consistency, Isolation, Durability) transactions for big data workloads. This capability is crucial for ensuring data reliability, preventing data corruption, and maintaining data integrity, making it the default storage format in modern lakehouse implementations like Fabric.

- Schema Enforcement and Evolution: The concept of “validated data” in the silver layer and “refined data” in the gold layer of the Medallion Architecture (discussed below) inherently implies a robust level of schema management and enforcement as data progresses through the pipeline. Delta Lake’s internal structure and transaction logs also contribute to faster read queries, increased data freshness, and the ability to perform data rollbacks.

- Cost-Effective Scalability: By leveraging cloud object storage, data lakehouses provide a cost-effective solution for storing large volumes of new data in real-time, offering scalability without the prohibitive costs associated with traditional data warehouses.

- Broad Analytics Support: Data lakehouses are designed to accommodate a wide range of analytical needs, from traditional Business Intelligence (BI) to sophisticated AI and Machine Learning (ML)-driven analytics. They empower data scientists to quickly extract insights from raw data using advanced AI tools and support both prescriptive analytics (akin to data warehouses) and predictive analytics (akin to data lakes).

- Reduced Data Movement and Duplication: A core objective of the lakehouse paradigm is to minimize data movement and duplication by striving to store only one copy of data. This is further enhanced by the ability to use shortcuts to reference data stored in other locations rather than physically copying it.

- Open Format: Data within a lakehouse is typically stored in an open format, ensuring interoperability. This allows the data to be queried by various analytical engines (e.g., Power BI, T-SQL, Apache Spark) and accessed by non-Fabric applications via standard APIs and SDKs.

Vendor Specific Lakehouse Implementations

Leading cloud providers and data platforms have embraced the lakehouse paradigm, offering their own integrated solutions:

- Databricks Lakehouse Platform: Pioneered by Databricks, their Lakehouse Platform is built on Delta Lake, providing a unified platform for data engineering, machine learning, and BI. It offers a platform experience that allows enterprises to adapt to open-source Delta Lake architecture.

- Microsoft Fabric Lakehouse: Microsoft Fabric provides a data architecture platform for storing, managing, and analyzing structured and unstructured data in a single location. It combines the scalability of a data lake with the performance and structure of a data warehouse. When a Lakehouse is created in Fabric, a SQL analytics endpoint is automatically generated, providing a read-only, relational interface over Delta tables using T-SQL. Fabric’s OneLake serves as the data lake, aiming to remove silos, reduce data movement and duplication, and support open formats (like Delta and Apache Iceberg) for various analytical engines. Snowflake has also added the ability to write Iceberg tables directly to OneLake.

- Snowflake Data Cloud (Lakehouse Analytics): Snowflake‘s Data Cloud is a self-managed platform that supports data storage, processing, and analytics. It uses a hybrid architecture with separated storage and compute (virtual warehouses) and is designed for fast query performance on open table formats, including Apache Iceberg and Delta tables, with zero data movement. Snowflake allows connecting data silos across architectures without data movement, simplifying the data lifecycle and enabling advanced analytics on diverse data types. It provides robust governance through Snowflake Horizon Catalog and supports building transactional data lakehouse patterns for unified analytics and AI/ML workloads.

- AWS Modern Data Architecture (Lakehouse): AWS‘s modern data architecture allows building a scalable data lake (on Amazon S3) and leveraging a broad collection of purpose-built data services for analytics and machine learning. AWS SageMaker Lakehouse unifies access to data across Amazon S3 data lakes and Amazon Redshift data warehouses. It supports “zero-ETL” integrations to bring transactional data from operational databases (like Amazon RDS and Amazon Aurora) into the lakehouse in near real-time, exposing it via Apache Iceberg APIs for comprehensive analysis. AWS Glue Data Catalog provides a uniform repository for metadata and a centralized framework for schema management.

- Google Cloud Data Lakehouse: Google Cloud offers a cloud-native, scalable, and secure data lakehouse solution. It combines low-cost cloud object storage with serverless compute engines and powerful data management offerings. The lakehouse integrates metadata layers over raw data storage to provide warehouse-like capabilities such as structured schemas, ACID transactions, and data governance. It supports diverse workloads including analytics, SQL, machine learning, and data science from the same repository. Key components include Cloud Storage, BigQuery, Dataproc, Dataflow, Dataplex, and Vertex AI.

Data Warehouse vs. Data Lake vs. Data Lakehouse

| Characteristic | Data Warehouse | Data Lake | Data Lakehouse |

| Primary Data Types Handled | Structured, Semi-structured | Raw (Structured, Unstructured, Semi-structured) | All (Structured, Unstructured, Semi-structured) |

| Schema Approach | Schema-on-Write (pre-defined) | Schema-on-Read (flexible) | Flexible (Schema-on-Read with enforcement capabilities via Delta Lake) |

| Storage Cost | High (for large volumes) | Low (object storage) | Moderate (cost-effective object storage) |

| Query Performance (Typical) | High (for structured queries) | Variable (requires advanced tools) | High (for diverse queries) |

| Data Quality & Reliability | High (governed) | Low (potential for “swamp”) | High (ACID, Medallion layers) |

| Primary Use Cases | Traditional BI, Reporting, Historical Analysis | Data Science, Exploration, Big Data Processing | BI, AI/ML, Advanced Analytics, Real-time |

| Data Governance | Strong (centralized) | Weak (prone to disorganization) | Strong (unified, e.g., Unity Catalog, Medallion) |

| ETL/ELT Approach | ETL | ELT | ELT |

| Native AI/ML Support | Limited/Indirect | Strong (raw data access, model training) | Strong (native, integrated) |

The Medallion Architecture (Bronze, Silver, Gold Layers) for Data Quality and Governance

The medallion lakehouse architecture is a widely recommended design pattern for logically organizing data within a lakehouse environment. Its core objective is to incrementally and progressively improve the structure, quality, and usability of data as it flows through distinct layers. This structured progression is key to ensuring data reliability and auditability.

The Medallion Architecture is far more than just a data organization pattern; it functions as a critical governance framework within the lakehouse environment. It directly addresses the “data swamp” problem often associated with raw data lakes by imposing a structured, quality-driven pipeline for data refinement. This multi-layered approach ensures data reliability, auditability, and usability for a diverse range of consumers, from data scientists requiring access to raw data to business analysts who need highly curated, performant datasets for reporting. By systematically refining data, it establishes a “single source of truth” , which is absolutely essential for fostering trust in analytical outputs and AI models.

- Bronze Layer (Raw Zone): This initial layer stores source data in its original, raw format, accommodating unstructured, semi-structured, or structured data types. Data in this layer is typically append-only and immutable, serving as a foundational source of truth that enables future reprocessing and auditing. It captures data “as-is” along with any relevant metadata.

- Silver Layer (Enriched Zone): Data in this layer is sourced from the Bronze layer and undergoes cleansing, standardization, and initial structuring into tables (rows and columns). It may also be integrated with other datasets to provide a unified “Enterprise view” of key business entities, such as master customers, products, and non-duplicated transactions. This layer is designed to enable self-service analytics and serves as a primary source for further refinement in the Gold layer. Only minimal or “just-enough” transformations are applied at this stage to maintain agility.

- Gold Layer (Curated Zone): This final layer stores data sourced from the Silver layer, which has been highly refined and aggregated to meet specific downstream business and analytical requirements. Tables in the Gold layer typically conform to star schema designs, optimized for high performance and usability for Business Intelligence (BI) and Machine Learning (ML) applications. More complex, project-specific transformations and business rules are applied during the loading of data from Silver to Gold.

In a typical implementation, each zone should ideally be separated into its own lakehouse or data warehouse within a unified data lake like OneLake, facilitating controlled data movement and transformation between zones.

Operationalizing Data: Lakebase (OLTP on the Lakehouse)

Introduction to Lakebase

The concept of “Lakebase” refers to an Online Transaction Processing (OLTP) database engine that is deeply integrated with a data lakehouse, designed to handle low-latency, high-concurrency transactions for operational applications while seamlessly syncing with analytical workflows.

Databricks Lakebase (PostgreSQL)

Databricks Lakebase is a fully managed OLTP database engine built directly into the Databricks Data Intelligence Platform, leveraging a PostgreSQL foundation. It allows organizations to create and manage OLTP databases directly on Azure Databricks, utilizing Databricks-managed storage and compute resources.

Microsoft Fabric SQL Database (SQL DB in Fabric)

Microsoft Fabric’s SQL database is a developer-friendly transactional database, based on Azure SQL Database, designed as the home for OLTP workloads within Fabric. It automatically replicates data into OneLake in near real-time, converting it to Parquet in an analytics-ready format. This enables downstream scenarios like data engineering, data science, and Power BI reporting. It uses the same SQL Database Engine as Azure SQL Database and supports intelligent performance features like automatic index creation. SQL database in Fabric allows cross-database queries, joining data from other SQL databases, mirrored databases, and warehouses within a single T-SQL query. This feature is currently in preview.

Bridging the Gap between OLTP and OLAP Workloads

For decades, OLTP systems, characterized by fast, row-level transactions, and OLAP systems, designed for large-scale analytical queries, have operated in separate, distinct environments. This traditional separation inherently created data silos between transactional and analytical data, leading to complex data movement and potential staleness.

Lakebase solutions fundamentally “collapse this long-standing wall between OLTP and analytics”. By fusing transactional database semantics with lakehouse governance, they enable organizations to run high-throughput, low-latency transactional workloads while simultaneously keeping this operational data in real-time synchronization with the analytical Lakehouse environment. This deep integration allows for the seamless combination of operational, analytical, and AI workloads without the need for custom, often brittle, ETL pipelines, thereby significantly reducing application complexity.

The historical architectural separation of OLTP and OLAP systems has been a major impediment to achieving true real-time intelligence within enterprises. This separation often necessitated complex, brittle ETL processes, introduced data staleness, and made it exceedingly difficult to build truly real-time intelligent applications. Lakebase’s emergence signifies a critical leap towards a genuinely unified data platform where operational data is immediately available for comprehensive analytics and AI, and conversely, analytical insights can directly inform and influence operational systems. This capability unlocks transformative use cases such as real-time fraud detection, hyper-personalized recommendations based on live customer behavior, and dynamic pricing adjustments, which were previously either technically challenging or economically unfeasible. It streamlines the entire data lifecycle, drastically reduces data latency, and empowers developers to build sophisticated “intelligent applications” with unprecedented efficiency.

Key Features of Lakebase Solutions

- Fully Managed and Serverless: Both Databricks Lakebase and Microsoft Fabric SQL DB are fully managed, handling all aspects of storage, compute, provisioning, and scaling, removing significant operational burden. They leverage architectures that separate compute and storage, enabling independent scaling while supporting ultra-low latency and high concurrency transactions.

- PostgreSQL/SQL Compatibility: Databricks Lakebase offers standard PostgreSQL semantics, including support for row-level transactions, indexes, and access via JDBC/psql drivers, along with

pgvectorfor GenAI/RAG agents. Microsoft Fabric SQL DB uses the Azure SQL Database Engine, providing T-SQL compatibility. - Copy-on-Write Branching (Databricks): Built on Neon technology, Databricks Lakebase introduces “copy-on-write” branching, allowing for instant, zero-copy clones of databases (branches). These branches are lightweight, operate independently, and are economically efficient, ideal for isolated development, testing, or point-in-time recovery.

- Real-time Sync with Lakehouse: Both solutions provide managed Change Data Capture (CDC) into their respective lakehouse environments (Delta Lake for Databricks, OneLake for Fabric), ensuring transactional rows are kept in real-time synchronization with BI models and the broader analytical layer. This simplifies data synchronization between OLTP and OLAP workloads.

- Unified Governance: Databricks Lakebase integrates with Unity Catalog for consistent security policies and access control across OLTP and OLAP data. Microsoft Fabric SQL DB integrates with OneLake’s centralized data governance, allowing data to be shared and accessed across Fabric items.

- High Availability: Both offer multi-zone high availability to protect against zonal failures, with Databricks Lakebase supporting readable secondaries for read workload scaling.

Lakebase vs. Traditional OLTP Databases

| Characteristic | Traditional OLTP (e.g., PostgreSQL) | Databricks Lakebase | Microsoft Fabric SQL DB |

| Integration with Analytics/AI | Separate systems, often requiring custom integration | Natively integrated with Lakehouse platform | Natively integrated with Fabric Lakehouse/OneLake |

| Data Sync for Analytics | Requires complex ETL/CDC pipelines for analytical sync | Real-time sync with Delta Lake via managed CDC (simplified) | Automatic near real-time replication to OneLake |

| Management & Operations | Manual provisioning, scaling, maintenance (high operational burden) | Fully managed, serverless, decoupled compute/storage, instant provisioning (low operational burden) | Fully managed, based on Azure SQL DB, easy to configure |

| Scalability Model | Primarily vertical scaling (limited elasticity) | Horizontal scaling (read replicas, HA configurations) | Scalable with Fabric capacity, supports cross-database queries |

| Development Workflow | Traditional database development, often siloed | AI-native branching (DevEx), ephemeral environments | Integrated with Fabric data engineering, data science, notebooks |

| Governance Model | Database-specific roles & privileges | Unified governance via Unity Catalog (cross-platform) | Unified governance via OneLake catalog |

| Cost Model | Upfront capital costs, fixed operational costs | Pay-as-you-go (cost-effective, elastic) | Simplified billing in single capacity |

| Key Differentiator | Optimized purely for transactional workloads | Unifies OLTP and OLAP on a single platform, built for intelligent applications | Home for OLTP in Fabric, integrated end-to-end analytics |

This comparison starkly contrasts Lakebase’s modern, integrated approach with the more traditional, often siloed nature of standalone OLTP databases. It highlights Lakebase’s unique value proposition, particularly its “AI-native” features, seamless integration with the broader lakehouse ecosystem, and operational efficiencies. For a technical leader, this comparative analysis is invaluable in evaluating whether to adopt an innovative, integrated solution like Lakebase or to continue relying on traditional OLTP databases, based on critical factors such as operational overhead, real-time analytical needs, strategic AI initiatives, and overall platform consolidation goals.

When to Use Each Architecture

The selection of the optimal data architecture is not a one-size-fits-all decision; rather, it is a strategic choice that must align with an organization’s specific business requirements, the characteristics of its data, and the nature of its anticipated workloads.

Data Warehouse (Cloud-based)

A cloud-based data warehouse is ideal for organizations primarily dealing with highly structured and semi-structured data that requires high-performance Business Intelligence (BI) and standardized reporting. It is particularly well-suited for well-defined analytical use cases where data is consistently transformed before loading (via ETL or ELT processes). Cloud data warehouses excel in historical analysis and generating aggregated views for executive dashboards. While cloud DWs offer significant scalability and cost benefits over their traditional on-premises counterparts, they can still be more expensive for storing vast quantities of raw data compared to data lakes. Moreover, they may struggle to efficiently handle the sheer variety and volume of unstructured data that is increasingly prevalent in modern enterprises.

- Amazon Redshift (AWS): A fully managed, petabyte-scale cloud data warehouse service that is part of AWS’s broad portfolio of analytics services.

- Snowflake: While often positioned as a data lakehouse, Snowflake also functions as a highly scalable cloud data warehouse, optimized for structured and semi-structured data, with strong BI and reporting capabilities.

- Google BigQuery (GCP): A fully managed, serverless data warehouse that enables scalable analysis of petabytes of data using SQL. It can query data directly from Cloud Storage, making it a key component for analytical workloads.

- Microsoft Fabric Data Warehouse: Within Microsoft Fabric, a data warehouse can be created in OneLake, often used for the Gold layer of a medallion architecture, optimized for BI and reporting.

Data Lakehouse

The data lakehouse is the preferred choice for organizations that need to handle a vast and diverse array of data types—structured, semi-structured, and unstructured—in a unified, cost-effective, and scalable manner.4 It is optimal for scenarios requiring both traditional BI capabilities and advanced analytics, including comprehensive AI and Machine Learning (ML) model training and serving. The lakehouse is particularly valuable when there is a need to combine historical data analysis with the flexibility to explore raw data for new, unforeseen analytical opportunities. Its ability to provide ACID transactions and schema enforcement via Delta Lake makes it a robust platform for data quality and reliability, addressing the “data swamp” issues often associated with pure data lakes. Organizations that prioritize a single source of truth for all data, reduced data movement, and simplified governance across diverse workloads will find the lakehouse approach highly beneficial.

- Databricks Lakehouse Platform: A leading proponent of the lakehouse architecture, built on Delta Lake, offering a unified platform for data engineering, ML, and BI.

- Microsoft Fabric Lakehouse: Provides a unified platform for structured and unstructured data, with automatic SQL analytics endpoints and integration with OneLake for open formats and reduced data movement.

- Snowflake Data Cloud (Lakehouse Analytics): Offers powerful lakehouse analytics on open table formats (Iceberg, Delta), allowing users to connect data silos with zero data movement and perform advanced analytics and AI/ML.

- AWS SageMaker Lakehouse: Unifies access to data across S3 data lakes and Redshift data warehouses, supporting zero-ETL integrations from operational databases and leveraging Apache Iceberg for open access.

- Google Cloud Data Lakehouse: Leverages Cloud Storage, BigQuery, and other services to provide a scalable, secure data lakehouse solution with structured schemas, ACID transactions, and governance over diverse data types.

- Oracle Modern Data Platform: Combines Oracle transactional databases, data lake (OCI Object Storage), data warehouse (Autonomous Data Warehouse), and OCI AI/ML services to provide a comprehensive data management solution, supporting a data lakehouse approach for business analysis and machine learning.

Lakebase (OLTP on the Lakehouse)

Lakebase is specifically designed for organizations that require real-time operational data processing (OLTP) seamlessly integrated with their analytical and AI workloads within a unified data platform. It is the optimal choice for applications demanding low-latency, high-throughput transactional capabilities, such as customer-facing applications requiring up-to-date information, online feature stores for real-time AI inference, or enterprise transactional workloads that need reliable, concurrent processing. Lakebase eliminates the traditional silos between operational and analytical data, allowing for real-time insights by analyzing transactional and historical data together. This is crucial for use cases like instant fraud detection, personalized product recommendations based on live shopping cart contents, or automated market trading driven by streaming data.

- Databricks Lakebase (PostgreSQL): A fully managed PostgreSQL OLTP engine natively integrated into the Databricks Lakehouse Platform, providing transactional strength with lakehouse elasticity, analytics, and governance.

- Microsoft Fabric SQL Database: A developer-friendly transactional database based on Azure SQL Database, serving as the home for OLTP workloads in Fabric, with automatic near real-time replication to OneLake for analytics. It’s worth noting that Fabric’s Lakehouse SQL Endpoint is read-only. If you’re wanting to write back data to the Lakehouse using a SQL Endpoint this cannot be done; However, a Fabric Warehouse SQL Endpoint allows DML.

- Google Cloud AlloyDB: A PostgreSQL-compatible database designed for demanding enterprise OLTP workloads. It offers built-in analytics capabilities (HTAP) and can stream data or use federated queries with BigQuery and Vertex AI for real-time insights. Google Cloud Spanner is another highly scalable, globally distributed transactional database that can be integrated with BigQuery for analytical queries.

- AWS Purpose-Built Databases: While AWS doesn’t use the term “Lakebase” directly, its modern data architecture emphasizes purpose-built databases (like Amazon RDS, Amazon Aurora for relational OLTP, and various NoSQL options) that integrate with the data lake (S3) and data warehouse (Redshift) via services like AWS Glue and zero-ETL integrations to enable operational analytics and feed data into the lakehouse for unified access.

- Snowflake: Primarily an OLAP system, Snowflake is not designed for direct OLTP workloads. However, it integrates with external OLTP databases via ETL/ELT pipelines (e.g., Snowpipe for continuous loading) to pull transactional data for analytical processing.

- Oracle Modern Data Platform: Integrates Oracle transactional databases with its data lake and data warehouse components, enabling live data analysis by integrating transactional and analytical solutions for near real-time insights.

Decision Framework: Optimal Architecture for Specific Workloads

| Workload Type | Data Warehouse (Cloud) | Data Lakehouse | Lakebase (OLTP on Lakehouse) |

| High-Volume Transactions | Not suitable (OLAP-focused) | Not primary focus (analytical) | Designed for high-throughput, low-latency OLTP |

| Historical Analysis | Optimized for structured, historical BI & reporting | Excellent: Combines structured BI with raw data history | Can feed historical data to Lakehouse for analysis |

| Real-time Analytics | Good (with streaming ingestion) | Excellent: Supports real-time data processing and analytics | Excellent: Operational data immediately available for analytics |

| AI/ML Model Training | Limited (structured data only) | Ideal for diverse, raw data, feature engineering | Can capture feedback/operational data for training |

| AI/ML Real-time Inference | Limited | Good (with proper serving layers) | Online Feature Store, RAG agents, serving low-latency features |

| Standardized Reporting/BI | Highly optimized for structured BI | Excellent: Supports traditional BI on curated data (Gold layer) | Can be source for BI via Lakehouse sync |

| Ad-hoc Data Exploration | Limited (schema-on-write) | Excellent: Flexible schema-on-read for raw data | Not primary focus (transactional) |

| Custom Applications | Requires separate OLTP DB, complex integration | Supports analytical components of apps | Manages application state, data serving, intelligent apps |

| Unstructured Data Analysis | Not suitable | Handles all data types natively | Not primary focus (transactional) |

Integrating Common Tasks and Workloads

Modern data architectures are designed to support a wide array of enterprise tasks, moving beyond simple reporting to encompass advanced analytics and intelligent applications. The choice of architecture significantly influences how seamlessly these tasks are integrated and performed.

AI and Machine Learning

AI and ML are critical for a modern data strategy, enabling organizations to predict future events and embed intelligence into their systems and applications.

- Data Warehouses: While traditional data warehouses can support some AI/ML workloads, they are primarily limited to structured data. Feature engineering and model training on unstructured or semi-structured data would necessitate moving data to other platforms, introducing complexity and latency. Modern cloud data warehouses like Snowflake also offer AI capabilities, such as Snowflake Cortex AI for pre-built models and LLM functions, and Snowpark ML for custom model building within the platform.

- Data Lakehouses: The data lakehouse is uniquely positioned to support comprehensive AI/ML workflows. Its ability to store all forms of raw and structured data from diverse sources makes it ideal for data preparation and feature engineering. Data scientists can access vast amounts of raw data directly from the Bronze layer, cleanse and transform it in the Silver layer, and create highly curated datasets for model training in the Gold layer. This unified environment allows for quick extraction of insights from raw data using advanced AI tools.

- Databricks: Offers a unified platform for data engineering, machine learning, and BI, with native support for AI/ML workflows.

- Microsoft Fabric: Provides AI functions for data engineering (summarization, classification, text generation) and integrates FLAML for hyperparameter tuning. Data Science in Fabric supports end-to-end data science workflows from exploration to model serving.

- Snowflake: Offers Snowflake Cortex AI for pre-built models and LLM functions (e.g., text summarization, sentiment analysis, natural language queries, Document AI, forecasting, anomaly detection) and Snowpark ML for building, training, and deploying custom ML models using Python directly within Snowflake. It also supports developing AI data agents and defining ML workflows.

- AWS: Offers a broad and deep set of machine learning services (AI services, ML services, ML frameworks and infrastructure) to help organizations predict future events and build intelligence into their systems and applications. AWS SageMaker Lakehouse supports AI initiatives with unified data access.

- GCP: Leverages Vertex AI for machine learning, allowing users to integrate ML models directly with data in BigQuery and other data lake components.

- Oracle: The Oracle Modern Data Platform includes OCI AI/ML services to enrich data with embedded intelligence and surface insights directly inside applications.

- Lakebase: Lakebase extends the AI/ML capabilities of the lakehouse by providing a transactional layer for real-time inference and feedback loops. It can serve low-latency features for real-time inference and write model feedback directly back to the lakehouse. This integration is crucial for building intelligent applications and RAG agents that require up-to-the-second structured data to ground LLM prompts. The seamless sync with Unity Catalog managed tables (Databricks) or OneLake (Fabric) allows for fast and easy combination of operational, analytical, and AI workloads without custom ETL pipelines. Google Cloud’s AlloyDB also offers a fully featured vector database (AlloyDB AI) for GenAI agents and apps, with faster vector queries and integration with Vertex AI.

Business Intelligence and Reporting

BI and reporting involve the visual representation of data to reveal insights and patterns over time.

- Data Warehouses: Traditional and cloud data warehouses are purpose-built for BI and reporting on structured data. They provide highly optimized environments for complex SQL queries and are the backbone for dashboards and standard analytical reports.

- Data Lakehouses: Data lakehouses support traditional BI by providing highly refined and aggregated data in the Gold layer, often conforming to star schema designs optimized for performance and usability. This allows organizations to leverage data lakehouses for both traditional BI and more advanced analytics, providing a single source of truth for reporting.

- Microsoft Fabric Lakehouse: Automatically generates a SQL analytics endpoint for T-SQL queries, enabling BI tools like Power BI to connect directly to the lakehouse.

- Snowflake: Ensures consistent results across BI platforms and eliminates data extracts for truly interactive insights.

- AWS: Provides purpose-built analytics services, including Amazon Redshift, for traditional data warehousing and batch reporting, as well as real-time analytics for dashboards.

- GCP: BigQuery is used for powerful analytics and can be integrated with visualization tools to provide insights.

- Lakebase: While not a primary BI tool itself, Lakebase solutions can feed real-time operational data into the lakehouse environment, which then becomes available for near real-time BI and reporting. This allows dashboards to reflect the most current transactional data, enabling instant decision-making. Google Cloud’s AlloyDB, with its built-in columnar engine, is ideal for real-time BI dashboards.

Custom Applications (Real-time and Analytical Workloads)

Modern applications often require a blend of low-latency operational data and historical analytical insights.

- Data Warehouses: Building custom applications with traditional data warehouses for real-time workloads is challenging due to their OLAP-centric design and latency issues. They are better suited for batch analytical workloads that inform application logic rather than directly powering real-time features.

- Data Lakehouses: Data lakehouses provide the foundation for analytical workloads within custom applications, allowing developers to access and process large volumes of diverse data. They enable a holistic view of data, facilitating seamless data flow and integration between different systems.

- Snowflake: Supports building, distributing, and commercializing scalable, data-intensive applications, and allows for easy integration of data and apps in client environments.

- AWS: Its modern data architecture supports purpose-built databases for modern applications and allows for building real-time analytics and ML-based use cases.

- GCP: Dataflow is used for real-time ETL pipelines, and BigQuery can be used for real-time analytics, supporting custom applications.

- Lakebase: Lakebase solutions are game-changers for custom applications requiring both real-time and analytical workloads. They allow organizations to serve up-to-date information quickly to users in apps and dashboards, making their experience smoother and more responsive. They can manage application state in a transactional data store, serve featurized data at low latency to models, and enable integrated analytics by capturing and analyzing transactional and historical data together. This empowers the creation of intelligent applications that consume features or predictions generated in the lakehouse and update the analytical layer with fresh operational data, all within a unified platform. Examples include e-commerce applications with personalized recommendations, healthcare systems managing clinical trial data, or manufacturing solutions tracking IoT data for automated maintenance. Google Cloud’s AlloyDB is designed for demanding enterprise workloads and can accelerate agent and app development, supporting real-time business intelligence dashboards and fraud detection.

Summing it all up

The evolution of data architectures reflects a continuous pursuit of greater flexibility, scalability, and real-time intelligence. Traditional data warehouses, while foundational for structured BI, faced inherent limitations in handling the burgeoning volume and variety of modern data. The advent of cloud data warehouses significantly alleviated these constraints by offering elastic, cost-effective, and managed solutions.

Data lakes emerged as a response to the need for storing all data types in their raw form, enabling broad data exploration and foundational support for AI/ML. However, their inherent lack of structure presented challenges, leading to the potential for “data swamps.”

The data lakehouse represents a pivotal architectural convergence, successfully blending the strengths of data lakes (flexibility, cost-effectiveness, diverse data types) with those of data warehouses (structure, governance, performance for BI). Technologies like Delta Lake, with its ACID transactions and schema capabilities, are central to the lakehouse’s ability to provide a reliable, single source of truth for both traditional analytics and advanced AI/ML workloads. The Medallion Architecture further reinforces this by providing a systematic approach to data quality and governance across raw, cleansed, and curated layers. Leading cloud providers like Microsoft, AWS, Google Cloud, and data platforms like Snowflake and Oracle have all developed their own robust lakehouse offerings, demonstrating the widespread adoption and strategic importance of this paradigm.

The introduction of Lakebase solutions (such as Databricks Lakebase and Microsoft Fabric SQL DB) marks the latest significant step in this architectural evolution, directly addressing the historical separation between operational (OLTP) and analytical (OLAP) workloads. By embedding fully managed transactional engines directly within the lakehouse environment, these solutions enable real-time synchronization of operational data with analytical platforms. This unification streamlines data pipelines, reduces latency, and unlocks the potential for truly intelligent, real-time applications that can both consume and generate insights within a single, governed ecosystem. Other providers like Google Cloud (AlloyDB, Spanner) and AWS (purpose-built databases with zero-ETL integrations) also offer robust solutions for integrating transactional data with their analytical platforms, achieving similar outcomes.

The trend is clear: organizations are moving towards unified data platforms that minimize data movement, reduce operational complexity, and accelerate the path from raw data to actionable intelligence and AI-driven applications. Strategic architectural decisions will increasingly favor solutions that offer this holistic capability, allowing data professionals to focus less on infrastructure plumbing and more on extracting transformative value from their data assets. The future of data architecture lies in seamless integration, real-time capabilities, and native support for AI across the entire data lifecycle.

In a future post, we’ll dive into creating a sample solution with Microsoft Fabric and follow up with AWS and GCP. Be sure to check out my other posts at SQLTechBlog.com.

SQLTechBlog.com 2025 ©